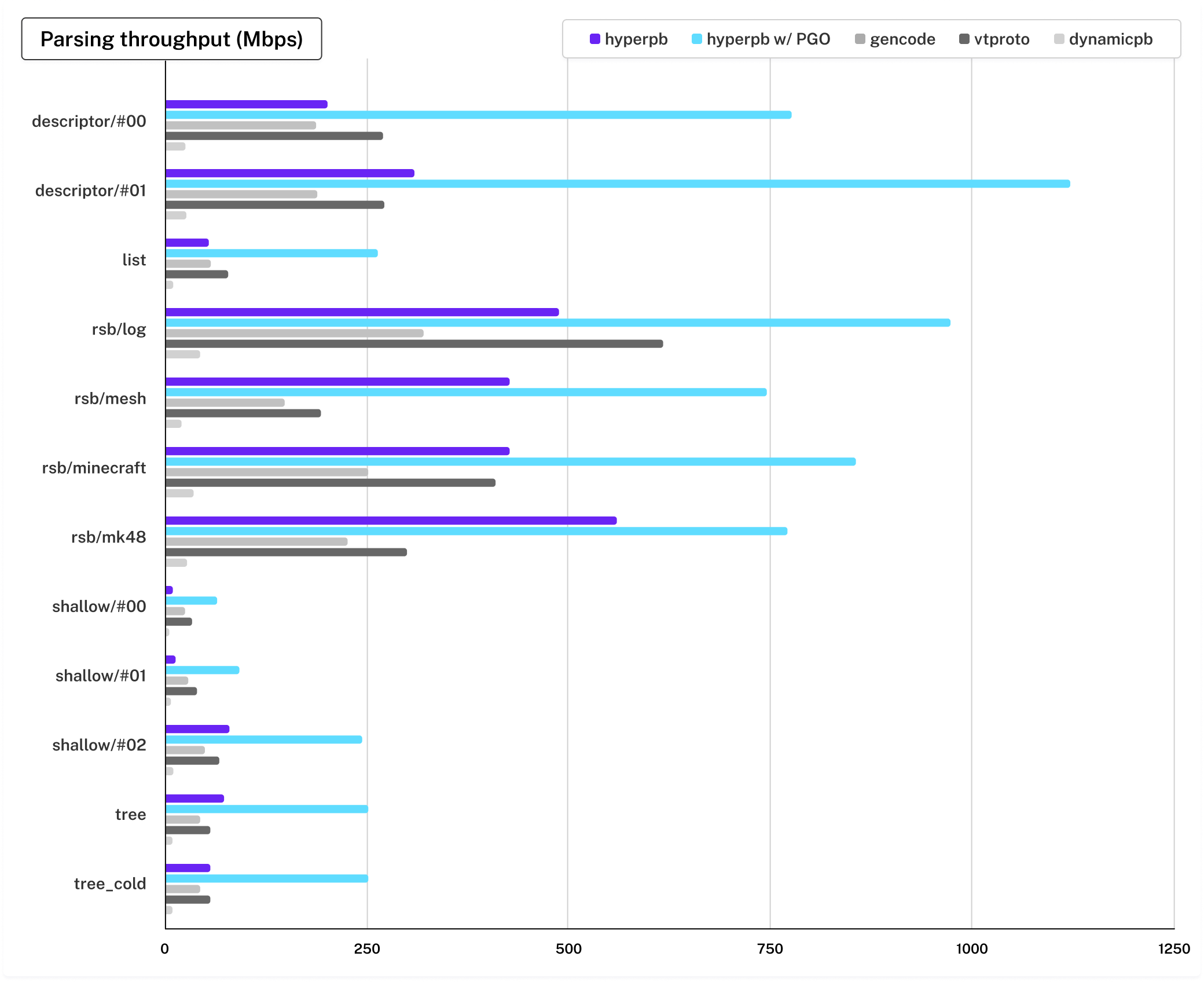

Today we’re announcing public availability of hyperpb, a fully-dynamic Protobuf parser that is 10x faster than dynamicpb, the standard Go solution for dynamic Protobuf. In fact, it’s so efficient that it’s 3x faster than parsing with generated code! It also matches or beats vtprotobuf’s generated code at almost every benchmark, without skimping on correctness.

Don’t believe us? We think our parsing benchmarks speak for themselves.

Here, we show two benchmark variants for hyperpb: out-of-the-box performance with no optimizations turned on, and real-time profile-guided optimization (PGO) with all optimizations we currently offer enabled.

This may seem like a niche issue. However, at Buf we believe that schema-driven development is the future, and this means enabling services that are generic over all Protobuf message types.

Building a dynamic Protobuf parser with the throughput to match (or outperform) ahead-of-time generated code unlocks enormous possibilities. Products that were previously not possible at scale become ordinary, even essential.

Specifically, hyperpb enables us to process and validate large amounts of arbitrary streamed data in a type-aware manner. This is a bottleneck we encountered while building Bufstream.

We have long been vocal about client-side validation in the world of Kafka. The downstream costs of invalid data slipping into a topic are very high, since it introduces server-side failure modes — but the high compute cost of broker-side validation is often cited as the reason for mitigating data corruption on an ongoing basis. This is the real reason that broker-side, schema-aware validation isn’t a big-ticket item for the big cloud players: they can’t figure it out.

But we can’t accept the status quo.

We built Bufstream to enable broker-side validation with Protobuf, an industry standard for high-performance, schema-enforced serialization. We also maintain Protovalidate, the gold-standard semantic validation library for Protobuf. In a nutshell, Bufstream uses schemas to parse incoming data from our customers, and runs Protovalidate on the result, to determine whether or not the data producer sent us a bad message. The poor state of dynamic Protobuf parsing would otherwise make this process slow and resource-intensive.

Fortunately, Buf employs most of the world’s Protobuf experts. One of them is Miguel Young de la Sota, a compiler engineer who previously worked on Protobuf’s compiler, as well as the C++ and Rust runtimes. Seeing a major optimization opportunity, he set out to solve this problem in Go, once and for all.

The result is hyperpb, capable of handling all proto2, proto3, and editions-mode schemas, with a perfect match against Protobuf Go.

hyperpb requires you to compile a parser at runtime, much like a regular expression library. The compilation step is pretty slow, because it’s an optimizing compiler!

Messages built from the compilation output can be used like any other Protobuf message type. They can be manipulated using reflection, much like dynamicpb.Message.

package main

import (

"fmt"

"log"

"buf.build/go/hyperpb"

"google.golang.org/protobuf/proto"

weatherv1 "buf.build/gen/go/bufbuild/hyperpb-examples/protocolbuffers/go/example/weather/v1"

)

// Byte slice representation of a valid *weatherv1.WeatherReport.

var weatherDataBytes = []byte{

0x0a, 0x07, 0x53, 0x65, 0x61, 0x74, 0x74, 0x6c,

0x65, 0x12, 0x1d, 0x0a, 0x05, 0x4b, 0x41, 0x44,

0x39, 0x33, 0x15, 0x66, 0x86, 0x22, 0x43, 0x1d,

0xcd, 0xcc, 0x34, 0x41, 0x25, 0xd7, 0xa3, 0xf0,

0x41, 0x2d, 0x33, 0x33, 0x13, 0x40, 0x30, 0x03,

0x12, 0x1d, 0x0a, 0x05, 0x4b, 0x48, 0x42, 0x36,

0x30, 0x15, 0xcd, 0x8c, 0x22, 0x43, 0x1d, 0x33,

0x33, 0x5b, 0x41, 0x25, 0x52, 0xb8, 0xe0, 0x41,

0x2d, 0x33, 0x33, 0xf3, 0x3f, 0x30, 0x03,

}

func main() {

// Compile a type for your message. Make sure to cache this!

// Here, we're using a compiled-in descriptor.

msgType := hyperpb.CompileMessageDescriptor(

(*weatherv1.WeatherReport)(nil).ProtoReflect().Descriptor(),

)

// Allocate a fresh message using that type.

msg := hyperpb.NewMessage(msgType)

// Parse the message, using proto.Unmarshal like any other message type.

if err := proto.Unmarshal(weatherDataBytes, msg); err != nil {

// Handle parse failure.

log.Fatalf("failed to parse weather data: %v", err)

}

// Use reflection to read some fields. hyperpb currently only supports access

// by reflection. You can also look up fields by index using fields.Get(), which

// is less legible but doesn't hit a hashmap.

fields := msgType.Descriptor().Fields()

// Get returns a protoreflect.Value, which can be printed directly...

fmt.Println(msg.Get(fields.ByName("region")))

// ... or converted to an explicit type to operate on, such as with List(),

// which converts a repeated field into something with indexing operations.

stations := msg.Get(fields.ByName("weather_stations")).List()

for i := range stations.Len() {

// Get returns a protoreflect.Value too, so we need to convert it into

// a message to keep extracting fields.

station := stations.Get(i).Message()

fields := station.Descriptor().Fields()

// Here we extract each of the fields we care about from the message.

// Again, we could use fields.Get if we know the indices.

fmt.Println("station:", station.Get(fields.ByName("station")))

fmt.Println("frequency:", station.Get(fields.ByName("frequency")))

fmt.Println("temperature:", station.Get(fields.ByName("temperature")))

fmt.Println("pressure:", station.Get(fields.ByName("pressure")))

fmt.Println("wind_speed:", station.Get(fields.ByName("wind_speed")))

fmt.Println("conditions:", station.Get(fields.ByName("conditions")))

}

}hyperpb.CompileFileDescriptorSet calls into hyperpb’s compiler, which lays out optimized structs for each message type to minimize memory usage (not unlike the new Opaque API, but more aggressive). It also generates a program for hyperpb’s parser, a carefully-optimized VM that interprets encoded Protobuf messages as its bytecode.

hyperpb also includes advanced features such as manual memory re-use, performance tuning knobs, “unsafe” modes, and profile-guided optimization. Profile-guided optimization in particular allows hyperpb to automatically tune its parser to the shape of data passing through your system in real time. This allows us to achieve another 50-100% more throughput.

hyperpb is a novel advancement in the state of the art for parsing Protobuf in pure Go. We developed a new approach to table-driven parsing, a paradigm first explored as a performance strategy by UPB, a small C kernel for Protobuf runtimes.

So why release it into open source? Doesn’t this risk that our competitors might use it? We considered hyperpb a trade secret for a long time, and didn’t plan to open source it until recently. We changed our minds for a few reasons.

First, wider Protobuf adoption only helps our business and our mission. We have cultivated a reputation as the stewards of Protobuf in the industry. If you use Protobuf, you’ve at least heard of us. The largest companies in the world trust us to solve their Protobuf needs, be that with the Buf CLI, the BSR, Connect RPC, or Protovalidate.

When orgs try to send Protobuf over Kafka, sooner or later they’ll be talking to us, no matter what our competitors are selling; Protobuf will always be one of our core competencies. Redpanda, Confluent — come and get it. Getting more people on board with Protobuf and gRPC will always make us bigger in the end, and we’d love to open a dialogue with more of your customers.

Second, hyperpb is by far not our only innovation; we’ve been looking into this problem since last year, and internally we’re working on pushing the bleeding edge beyond what we’re releasing today. Thanks to hyperpb’s design, we can continue to improve its compiler and optimizations as we learn more about the types of schemas our customers need; much like a programing language compiler improves with feedback from its users.

Finally, our business is growing quickly, and we always have more irons in the fire than people to handle them. If your job is boring and novel work is of interest, get in touch at https://buf.build/careers. We’d love to hear from you.

hyperpb is not magic; we’ve gone to great lengths to keep as much of the codebase understandable as possible (within the constraints of performance requirements). Miguel has a technical overview of hyperpb on his performance optimization blog. Additionally, in future posts, we look forward to discussing topics related to hyperpb’s implementation, including:

If you’d like to stay informed, subscribe to future blog posts, and watch and star hyperpb on GitHub. Also feel free to join our Slack to chat with us!